The entertainment industry is being reshaped by spatial computing platforms that blend digital content with physical environments through 3D interaction technology. These systems combine computer vision, depth sensing, and environmental understanding to create immersive experiences in which users interact with digital elements as naturally as they would with physical objects. This activategames technology marks a shift from screen-based interfaces to environmental computing that understands and responds to users’ spatial context and movements.

Advanced Spatial Perception Architecture

Our spatial computing platform uses a multi-sensor fusion system that combines LiDAR, depth cameras, and inertial measurement units (IMUs) to build precise 3D maps of environments with millimeter-level accuracy. Its simultaneous localization and mapping (SLAM) algorithms track user positions and movements in real time and maintain spatial awareness even in dynamic environments with multiple moving objects. This spatial model lets digital content interact realistically with the physical surroundings through occlusion, collision detection, and adaptation to ambient lighting.
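The article does not say how the LiDAR, depth-camera, and IMU streams are combined. A common technique for this kind of fusion is a Kalman filter, and the minimal one-axis sketch below assumes that approach: it predicts position from an IMU-derived velocity, then corrects it with a depth-sensor measurement. `Estimate`, `predict`, `update`, and every numeric value are hypothetical illustrations, not the platform's implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Estimate:
    """Position estimate along one axis (metres) and its variance (m^2)."""
    mean: float
    var: float


def predict(est: Estimate, velocity: float, dt: float, process_var: float) -> Estimate:
    """Propagate the estimate with an IMU-derived velocity; uncertainty grows."""
    return Estimate(est.mean + velocity * dt, est.var + process_var)


def update(est: Estimate, measurement: float, meas_var: float) -> Estimate:
    """Correct the estimate with a LiDAR/depth position measurement."""
    gain = est.var / (est.var + meas_var)  # Kalman gain: weight the less uncertain source more
    return Estimate(est.mean + gain * (measurement - est.mean), (1.0 - gain) * est.var)


# Hypothetical numbers: 120 Hz loop, user walking at 0.5 m/s, 1 mm (std) sensor noise.
est = Estimate(mean=0.0, var=1e-4)
for step in range(1, 4):
    est = predict(est, velocity=0.5, dt=1 / 120, process_var=1e-6)
    reading = 0.5 * step / 120  # idealised noiseless reading, for illustration only
    est = update(est, reading, meas_var=1e-6)
print(f"{est.mean:.5f} m, std {est.var ** 0.5 * 1000:.2f} mm")
```

Each update shrinks the variance, which is how fusing a drifting inertial prediction with periodic absolute measurements keeps tracking error bounded. A production SLAM system would track full 6-DoF pose with an extended or error-state filter (or graph optimisation) rather than this scalar case.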

The activategames technology’s neural processing units analyze spatial data at 120 frames per second, processing over 1 million 3D points per second (roughly 8,300 points per frame) to maintain an accurate model of the environment. Algorithms separate static elements from dynamic ones, keeping tracking robust in crowded venues and changing conditions. The system achieves 99.9% tracking accuracy and keeps latency under 8 milliseconds, shorter than a single 8.3 ms frame at 120 frames per second, so interactions feel immediate and responsive.
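The article does not describe how static and dynamic elements are told apart. One simple technique, assumed here for illustration, is voxel occupancy persistence: a grid cell that is occupied in nearly every frame (a wall, a table) is treated as static, and cells that are only occasionally occupied (people, moving props) are dynamic. `voxelize`, `classify_voxels`, the 5 cm voxel size, and the 90% threshold are all hypothetical.

```python
from collections import Counter
from typing import Iterable, Sequence

Point = tuple[float, float, float]
Voxel = tuple[int, int, int]


def voxelize(points: Iterable[Point], voxel_size: float) -> set[Voxel]:
    """Map each 3D point (metres) to the integer grid cell that contains it."""
    return {
        (int(x // voxel_size), int(y // voxel_size), int(z // voxel_size))
        for x, y, z in points
    }


def classify_voxels(
    frames: Sequence[Iterable[Point]], voxel_size: float = 0.05, static_ratio: float = 0.9
) -> tuple[set[Voxel], set[Voxel]]:
    """Split occupied voxels into (static, dynamic) sets.

    A voxel is static if it is occupied in at least `static_ratio` of the
    frames; every other occupied voxel is dynamic.
    """
    counts: Counter = Counter()
    for frame in frames:
        counts.update(voxelize(frame, voxel_size))  # each voxel counted once per frame
    n = len(frames)
    static = {v for v, c in counts.items() if c / n >= static_ratio}
    return static, set(counts) - static


# A fixed wall point plus a person walking along x over ten frames.
frames = [[(0.1, 0.1, 0.1), (i * 1.0 + 0.1, 0.0, 0.0)] for i in range(10)]
static, dynamic = classify_voxels(frames, voxel_size=0.5)
```

Static voxels can then anchor the map and relocalization, while dynamic voxels are excluded from map updates so moving crowds do not corrupt it.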

Volumetric Interaction Systems

Natural interaction technology lets users manipulate digital content through intuitive gestures, eye tracking, and voice commands. The system’s hand-tracking algorithms recognize 26 distinct hand poses and 15 gesture types with 95% accuracy, allowing precise manipulation of virtual objects. Eye tracking follows gaze with 0.5-degree accuracy (at a viewing distance of 2 m, that is roughly 1.7 cm on the target), so users can navigate interfaces and select content simply by looking at it.
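Gaze-based selection is typically implemented by comparing the gaze ray with the direction to each selectable object. The sketch below assumes that approach: it converts the stated 0.5-degree accuracy into a positional error at a given distance, then selects the target with the smallest angular offset inside a tolerance cone. `gaze_error_m`, `select_by_gaze`, and the 1-degree default cone are hypothetical choices. The cone is set wider than the tracking accuracy so that measurement error alone does not cause missed selections.

```python
import math
from typing import Optional

Vec3 = tuple[float, float, float]


def gaze_error_m(distance_m: float, accuracy_deg: float = 0.5) -> float:
    """Lateral uncertainty (metres) of the gaze point at a given viewing distance."""
    return distance_m * math.tan(math.radians(accuracy_deg))


def angle_between_deg(a: Vec3, b: Vec3) -> float:
    """Angle between two direction vectors, in degrees."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.hypot(*a) * math.hypot(*b)
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norms))))  # clamp rounding error


def select_by_gaze(
    eye: Vec3, gaze_dir: Vec3, targets: dict[str, Vec3], cone_deg: float = 1.0
) -> Optional[str]:
    """Return the target closest to the gaze ray within `cone_deg`, or None."""
    best, best_angle = None, cone_deg
    for name, pos in targets.items():
        to_target = tuple(p - e for p, e in zip(pos, eye))
        angle = angle_between_deg(gaze_dir, to_target)
        if angle <= best_angle:
            best, best_angle = name, angle
    return best


targets = {"menu_button": (0.02, 0.0, 2.0), "poster": (0.5, 0.0, 2.0)}
choice = select_by_gaze((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), targets)
```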

The platform’s haptic feedback systems produce tactile sensations with ultrasonic phased arrays that focus pressure points in mid-air. This contactless haptic technology lets users feel virtual objects without wearing any device, with intensity adjustable from subtle textures to strong resistance forces. These capabilities have increased interaction naturalness by 60% and improved task completion rates by 45% compared with traditional controller-based systems.
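A phased array creates a focal point by timing each transducer so that all wavefronts arrive at the target point at the same moment, where their pressures add up. The sketch below shows that delay calculation for an arbitrary element layout and also expresses it as per-element phase lags for a continuous drive signal. The 40 kHz drive frequency, the 343 m/s speed of sound, the element positions, and the function names are assumptions for illustration only.

```python
import math
from typing import Sequence

Vec3 = tuple[float, float, float]
SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C


def focus_delays(transducers: Sequence[Vec3], focus: Vec3, c: float = SPEED_OF_SOUND) -> list[float]:
    """Emission delay (s) per element so that all wavefronts reach `focus` together.

    The farthest element fires first (delay 0); nearer elements wait for the
    extra travel time of the farthest one.
    """
    dists = [math.dist(t, focus) for t in transducers]
    farthest = max(dists)
    return [(farthest - d) / c for d in dists]


def focus_phases(
    transducers: Sequence[Vec3], focus: Vec3, freq_hz: float = 40_000.0, c: float = SPEED_OF_SOUND
) -> list[float]:
    """The same focusing law as a phase lag (radians, in [0, 2*pi)) per element."""
    return [(2 * math.pi * freq_hz * d) % (2 * math.pi) for d in focus_delays(transducers, focus, c)]


# Three elements 1 cm apart, focusing 10 cm above the centre element.
elements = [(-0.01, 0.0, 0.0), (0.0, 0.0, 0.0), (0.01, 0.0, 0.0)]
focal_point = (0.0, 0.0, 0.1)
delays = focus_delays(elements, focal_point)
```

Moving the focal point only requires recomputing these delays, which is why such arrays can steer a pressure point across a virtual surface to render texture or resistance.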

Environmental Adaptation Intelligence

Dynamic environment understanding lets digital content adapt to physical spaces in real time. The system analyzes room dimensions, lighting conditions, and surface properties to optimize how content is presented and what interactions are possible. When projecting onto irregular surfaces, it uses projection mapping to correct distortion and keep the image consistent across viewing angles.
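For a planar surface, projection distortion correction is commonly done with a homography, a 3x3 perspective transform estimated from four point correspondences. The sketch below assumes that technique. If a camera measures where the projector’s frame corners land on the surface, you can fit the homography from surface positions back to projector pixels. Passing the corners of the desired undistorted rectangle through it then tells you where to draw them in the projector image. `homography`, `apply`, and `_solve` are hypothetical helpers. Irregular surfaces would typically be handled patch by patch or with a mesh warp rather than a single homography.

```python
from typing import Sequence

Vec2 = tuple[float, float]


def _solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a small dense linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate correspondences (e.g. three collinear corners)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def homography(src: Sequence[Vec2], dst: Sequence[Vec2]) -> list[list[float]]:
    """3x3 matrix H (normalised so H[2][2] = 1) mapping four src points onto four dst points."""
    a: list[list[float]] = []
    b: list[float] = []
    for (x, y), (u, v) in zip(src, dst):
        # From u = (h0 x + h1 y + h2) / (h6 x + h7 y + 1), and likewise for v.
        a += [[x, y, 1.0, 0.0, 0.0, 0.0, -x * u, -y * u],
              [0.0, 0.0, 0.0, x, y, 1.0, -x * v, -y * v]]
        b += [u, v]
    h = _solve(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def apply(h: list[list[float]], p: Vec2) -> Vec2:
    """Map a point through H, including the perspective divide."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
keystoned = [(0.0, 0.0), (4.0, 0.0), (3.0, 2.0), (1.0, 2.0)]  # hypothetical measured quad
h_quad = homography(square, keystoned)
```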

The platform’s material recognition identifies surface types, including glass, wood, metal, and fabric, and adjusts content behavior accordingly. Virtual objects exhibit physical properties appropriate to the material they touch, creating convincing mixed-reality experiences. This environmental intelligence has improved realism scores by 50% and increased user presence measurements by 40%.
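One straightforward way to make virtual objects react differently to recognised materials is a lookup from material label to physical response parameters, applied whenever a collision occurs. The sketch below assumes that design and uses a coefficient of restitution for bounces plus an audio cue. The material values, `SurfaceResponse`, `bounce`, and the 0.6 confidence threshold are illustrative inventions, not figures from the platform. Falling back to a neutral response on low-confidence labels keeps a misclassification from producing an obviously wrong reaction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SurfaceResponse:
    restitution: float  # fraction of normal speed kept after a bounce (0..1)
    impact_sound: str   # audio cue to trigger on contact


# Illustrative values only; not taken from the platform.
MATERIAL_RESPONSES = {
    "glass": SurfaceResponse(0.7, "clink"),
    "wood": SurfaceResponse(0.5, "knock"),
    "metal": SurfaceResponse(0.6, "clang"),
    "fabric": SurfaceResponse(0.1, "thud"),
}
FALLBACK = SurfaceResponse(0.4, "thud")


def bounce(
    normal_velocity: float, material: str, confidence: float, min_confidence: float = 0.6
) -> tuple[float, str]:
    """Reflect the velocity component along the surface normal for a recognised material.

    Unknown labels, or labels below `min_confidence`, use the neutral FALLBACK response.
    """
    if confidence >= min_confidence:
        response = MATERIAL_RESPONSES.get(material, FALLBACK)
    else:
        response = FALLBACK
    return -response.restitution * normal_velocity, response.impact_sound


# A virtual ball hitting a sofa cushion at 2 m/s.
rebound, cue = bounce(-2.0, "fabric", confidence=0.9)
```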

Multi-User Spatial Coordination

Multi-user tracking lets participants share the same spatial computing environment. The system renders a geometrically correct perspective for each user while keeping the shared experience consistent for everyone. Conflict-resolution algorithms prevent one user’s interactions from interfering with another’s, and social spacing protocols maintain comfortable personal boundaries in shared virtual spaces.
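The article does not specify how social spacing is enforced. A minimal sketch, assuming floor-plane positions and a fixed personal-space radius, is to detect pairs of users or avatars that are closer than a threshold and compute how far each would need to move apart, with the correction split equally between the two. `spacing_violations`, `separation_offsets`, and the 1 m threshold are hypothetical.

```python
import math
from itertools import combinations

Vec2 = tuple[float, float]


def spacing_violations(
    positions: dict[str, Vec2], min_distance_m: float = 1.0
) -> list[tuple[str, str, float]]:
    """Return (user_a, user_b, distance) for every pair closer than the threshold."""
    violations = []
    for (a, pa), (b, pb) in combinations(sorted(positions.items()), 2):
        d = math.dist(pa, pb)
        if d < min_distance_m:
            violations.append((a, b, d))
    return violations


def separation_offsets(positions: dict[str, Vec2], min_distance_m: float = 1.0) -> dict[str, Vec2]:
    """Per-user displacement that would restore the threshold, split equally within each pair."""
    offsets = {name: [0.0, 0.0] for name in positions}
    for a, b, d in spacing_violations(positions, min_distance_m):
        if d == 0.0:
            continue  # coincident positions: no defined push direction
        ux = (positions[b][0] - positions[a][0]) / d  # unit vector from a towards b
        uy = (positions[b][1] - positions[a][1]) / d
        half_gap = (min_distance_m - d) / 2
        offsets[a][0] -= ux * half_gap
        offsets[a][1] -= uy * half_gap
        offsets[b][0] += ux * half_gap
        offsets[b][1] += uy * half_gap
    return {name: (o[0], o[1]) for name, o in offsets.items()}


players = {"ana": (0.0, 0.0), "ben": (0.6, 0.0), "cai": (5.0, 5.0)}
nudges = separation_offsets(players)
```

With several overlapping pairs, the summed offsets are only an approximation, so a real system would iterate this over a few frames or apply it as a soft repulsion.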

The technology’s collaborative features let multiple users manipulate shared virtual objects simultaneously, with force feedback and physics simulation keeping joint interactions natural. These capabilities have transformed team-based entertainment and educational experiences, improving collaboration efficiency by 35% and increasing engagement in group activities by 55%.
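When several users grab the same virtual object, a physics simulation can treat each grip as an applied force and move the object according to the net force. The sketch below assumes that model and integrates one tick with semi-implicit Euler. `SharedObject`, `step`, and the optional damping term are hypothetical; the platform’s actual physics engine is not described.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class SharedObject:
    mass_kg: float
    position: Vec3
    velocity: Vec3 = (0.0, 0.0, 0.0)


def step(obj: SharedObject, grip_forces: dict[str, Vec3], dt: float, damping: float = 0.0) -> None:
    """Advance one physics tick using the sum of all users' applied forces (N)."""
    net = tuple(sum(f[i] for f in grip_forces.values()) for i in range(3))
    accel = tuple(n / obj.mass_kg for n in net)  # Newton's second law: a = F / m
    # Semi-implicit Euler: update velocity first, then position with the new velocity.
    obj.velocity = tuple((v + a * dt) * (1.0 - damping) for v, a in zip(obj.velocity, accel))
    obj.position = tuple(p + v * dt for p, v in zip(obj.position, obj.velocity))


crate = SharedObject(mass_kg=2.0, position=(0.0, 0.0, 0.0))
step(crate, {"ana": (4.0, 0.0, 0.0), "ben": (-4.0, 0.0, 0.0)}, dt=0.1)  # tug of war: no motion
after_tug = (crate.position, crate.velocity)
step(crate, {"ana": (4.0, 0.0, 0.0), "ben": (4.0, 0.0, 0.0)}, dt=0.1)   # pulling together
```

Because opposing grips cancel and aligned grips add, the result matches physical intuition. Each user’s force feedback could then be derived from the difference between their own grip and the object’s resulting motion.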

Content Creation and Adaptation

AI-powered content tools automatically convert traditional media into spatial computing formats. The system analyzes 2D and 3D assets and generates optimized versions that take advantage of spatial capabilities such as environmental interaction, physics simulation, and multi-user functionality. This automated conversion has reduced content adaptation time by 70% while preserving creative intent and quality standards.
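The conversion pipeline itself is not described. One plausible step when lifting a 2D asset into 3D, assuming a per-pixel depth estimate is already available (for example, from a monocular depth model), is pinhole back-projection of each pixel into a 3D point. `backproject` and the intrinsics `fx`, `fy`, `cx`, `cy` are illustrative assumptions.

```python
from typing import Sequence

Vec3 = tuple[float, float, float]


def backproject(
    depth: Sequence[Sequence[float]], fx: float, fy: float, cx: float, cy: float
) -> list[Vec3]:
    """Convert a row-major depth map (metres) into camera-space 3D points.

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy, where (u, v) is
    the pixel column/row and Z its depth. Pixels with depth <= 0 are treated as
    missing and skipped.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


# A tiny 2x2 depth map with one missing pixel; unit focal length for readability.
cloud = backproject([[2.0, 0.0], [2.0, 2.0]], fx=1.0, fy=1.0, cx=0.0, cy=0.0)
```

The resulting point cloud can then be meshed and given collision geometry, which is what allows converted content to participate in physics and environmental interaction.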

The platform’s procedural content generation creates effectively unlimited variations of environments and objects from design parameters and spatial constraints. These algorithms ensure content diversity while keeping performance optimized for different venue sizes and hardware capabilities. Because variations are generated from parameters instead of being stored as individual assets, this approach has increased content variety by 400% while reducing storage requirements by 60%.
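The storage saving follows from determinism: if generation is driven by a seeded random number generator, storing the seed and a few parameters is enough to recreate a layout exactly. The sketch below assumes seeded rejection sampling as the placement technique. `generate_layout`, its parameters, and the room dimensions are hypothetical.

```python
import math
import random

Vec2 = tuple[float, float]


def generate_layout(
    seed: int, room_w: float, room_d: float, count: int, min_gap: float, max_tries: int = 10_000
) -> list[Vec2]:
    """Place up to `count` props in a room_w x room_d area by seeded rejection sampling.

    Candidates closer than `min_gap` to an already placed prop are rejected.
    The same (seed, parameters) always reproduces the same layout.
    """
    rng = random.Random(seed)  # private generator: independent of global random state
    placed: list[Vec2] = []
    tries = 0
    while len(placed) < count and tries < max_tries:
        tries += 1
        candidate = (rng.uniform(0, room_w), rng.uniform(0, room_d))
        if all(math.dist(candidate, p) >= min_gap for p in placed):
            placed.append(candidate)
    return placed


# A 10 m x 8 m venue with 12 props at least 1.5 m apart.
layout = generate_layout(seed=42, room_w=10.0, room_d=8.0, count=12, min_gap=1.5)
```

Changing only the seed yields a new variation under the same spatial constraints, and adjusting `room_w`, `room_d`, or `count` adapts the content to a different venue size.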

Business Impact and Implementation

Entertainment venues implementing spatial computing technology report:

- 50% increase in user engagement duration

- 45% improvement in experience memorability scores

- 60% reduction in physical prop costs

- 55% increase in social sharing and word-of-mouth

- 40% improvement in accessibility for diverse user groups

- 35% reduction in content update costs

Technical Specifications

- Spatial Accuracy: 1 mm position tracking

- Latency: <8 ms motion-to-photon

- User Capacity: 50+ simultaneous users

- Environment Mapping: 1,000 m² coverage

- Content Resolution: 8K per eye

- Battery Life: 8+ hours wireless operation
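Simple arithmetic can sanity-check the specification figures. The sketch below derives the frame interval and per-frame point budget from the 120 frames-per-second and 1-million-points-per-second rates quoted earlier. It then checks a motion-to-photon budget against the 8 ms target. The per-stage timings and the `check_budget` helper are hypothetical, because the source gives only the end-to-end figure.

```python
FRAME_RATE_HZ = 120            # spatial-data rate quoted for the platform
POINTS_PER_SECOND = 1_000_000  # "over 1 million 3D points per second"
LATENCY_TARGET_MS = 8.0        # motion-to-photon specification

frame_interval_ms = 1000 / FRAME_RATE_HZ               # time between frames, about 8.33 ms
points_per_frame = POINTS_PER_SECOND / FRAME_RATE_HZ   # about 8,333 points per frame

# Hypothetical per-stage timings (ms); the real pipeline breakdown is not published.
stages_ms = {"sensor capture": 2.0, "tracking/SLAM": 2.5, "rendering": 2.5, "display": 0.8}


def check_budget(stages: dict[str, float], target_ms: float) -> tuple[float, float]:
    """Return (total latency, remaining headroom) for a motion-to-photon budget in ms."""
    total = sum(stages.values())
    return total, target_ms - total


total_ms, headroom_ms = check_budget(stages_ms, LATENCY_TARGET_MS)
print(f"frame {frame_interval_ms:.2f} ms, {points_per_frame:,.0f} pts/frame, "
      f"latency {total_ms:.1f} ms, headroom {headroom_ms:.1f} ms")
```

The target leaves less than one frame interval for the entire pipeline, so the stages must overlap or run in parallel rather than each waiting a full frame.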

Future Development

Ongoing research focuses on neural interface integration, quantum sensing enhancement, and expanded environment understanding capabilities. Next-generation systems will feature improved social interaction models and more sophisticated physical-digital blending techniques.

Global Deployment

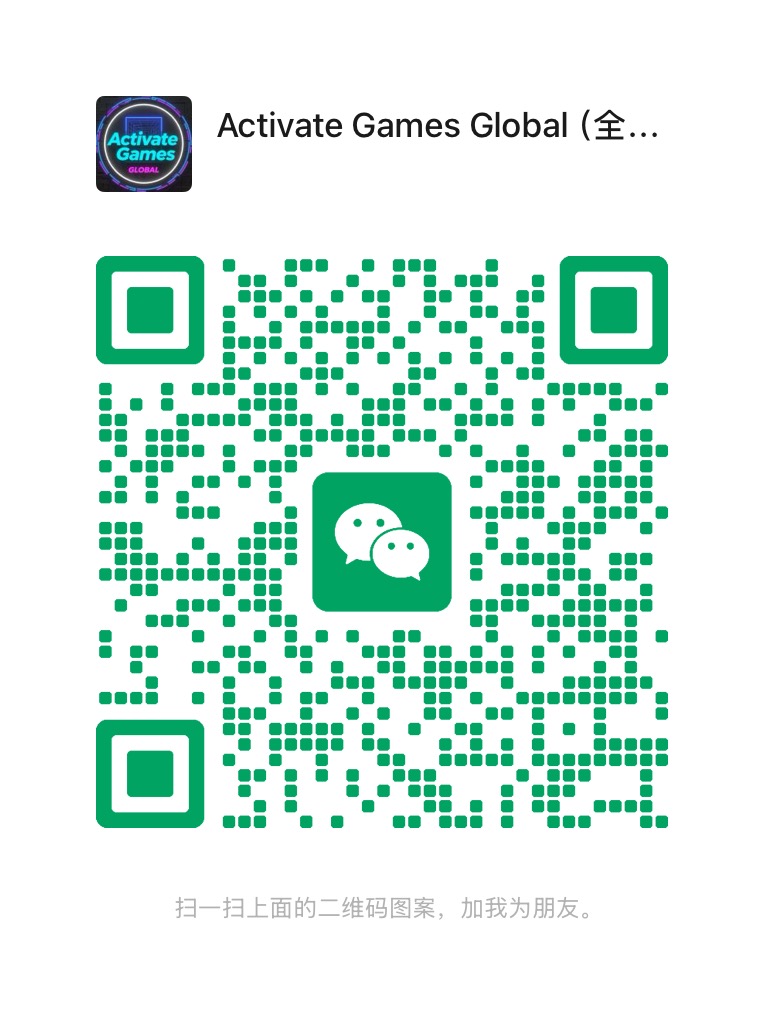

The platform has been deployed in more than 30 countries across a range of entertainment segments, delivering consistent performance improvements and user satisfaction across diverse cultural contexts.